Gone are the days of relying on trial and error to carve the path of a company’s growth and success. Companies have started adopting optimized methods for distributing their resources, and they are now implementing more strategic big data analysis techniques. These techniques are better ways to extract useful data and uncover patterns that support sound decision making. Businesses small and large use these techniques, which form the core of big data analytics, to obtain the best possible outcomes.

Big data analytics is a hybrid of several processing methods and techniques. These methods prove effective when companies use them together to acquire significant results that can inform better management. Here are the 10 key big data technologies used by both small and large companies.

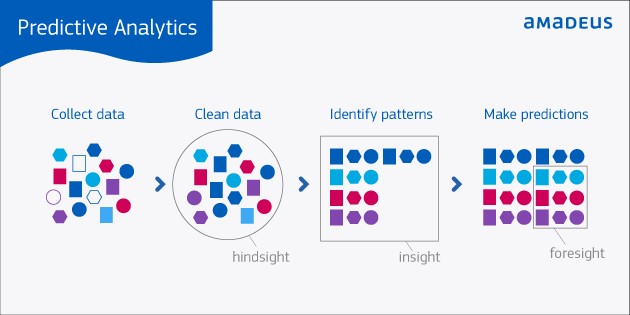

Avoid Making Mistakes through Predictive Analytics

Predictive analytics helps businesses avert the risks associated with the decision-making process. Hardware and software solutions for predictive analytics can be employed for analysis, decision making, and the distribution of scenarios through big data processing. Big data can help predict what may happen and help solve potential problems through analysis.
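To make the idea of predicting what may happen more concrete, here is a minimal sketch in Python. It assumes a simple straight-line trend and uses made-up monthly sales figures; the names `monthly_sales`, `fit_line`, and `forecast` are illustrative only, and real predictive analytics products rely on far richer models.

```python
# Hypothetical monthly sales figures (illustrative data only).
monthly_sales = [100.0, 110.0, 120.0, 130.0, 140.0]


def fit_line(ys):
    """Fit y = slope * x + intercept by ordinary least squares, with x = 0, 1, 2, ..."""
    n = len(ys)
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def forecast(ys, steps_ahead=1):
    """Extend the fitted trend line steps_ahead periods past the last observation."""
    slope, intercept = fit_line(ys)
    next_x = len(ys) - 1 + steps_ahead
    return slope * next_x + intercept


next_month = forecast(monthly_sales)
print(round(next_month, 2))
```

A forecast like this is only as good as the assumption behind it (here, that the trend stays linear), which is why the analysis step matters as much as the prediction.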

Distributed Data Storage

Distributed storage keeps replicated copies of data, which protects against the failure of independent nodes and against the loss or corruption of big data. Replication can also be used to provide low-latency, speedy access on large networks.
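The role of replication can be sketched with a toy in-memory store in Python. This is an illustration under simplifying assumptions, not how any particular product works: the class `ReplicatedStore`, the node names, and the hash-based placement are all hypothetical, and writes here do not check whether a node is down.

```python
import hashlib


class ReplicatedStore:
    """Toy store that writes each key to several nodes (hypothetical design)."""

    def __init__(self, node_names, replicas=2):
        self.nodes = {name: {} for name in node_names}
        self.down = set()
        self.replicas = replicas
        self._order = sorted(node_names)

    def _targets(self, key):
        # Pick a starting node from a hash of the key, then take the next ones in order.
        digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
        start = int(digest, 16) % len(self._order)
        return [self._order[(start + i) % len(self._order)]
                for i in range(self.replicas)]

    def put(self, key, value):
        # Store a copy on every target node.
        for name in self._targets(key):
            self.nodes[name][key] = value

    def fail(self, name):
        # Simulate a node failure.
        self.down.add(name)

    def get(self, key):
        # Read from the first replica that is still available.
        for name in self._targets(key):
            if name not in self.down and key in self.nodes[name]:
                return self.nodes[name][key]
        raise KeyError(key)


store = ReplicatedStore(["node-a", "node-b", "node-c"])
store.put("order-42", {"total": 99})
primary = store._targets("order-42")[0]
store.fail(primary)            # the first copy becomes unreachable
print(store.get("order-42"))   # the second copy still answers
```

With two replicas, the data survives the loss of any single node; losing both nodes that hold a key would still lose that key.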

Data Virtualization

Data virtualization is one of the most widely used big data technologies. TIBCO defines data virtualization as “an agile data integration approach organization use to gain more insight from their data.” It is used with Apache Hadoop and other distributed data stores for near-instantaneous access to big data held on several platforms worldwide. Put simply, data virtualization lets applications retrieve data without running into technical restrictions such as data formats or the physical location of the data.

Data Integration

Processing very large volumes of data in a way that is useful for each department is a big operational challenge for most organizations. Big data integration tools are very beneficial here because they let businesses consolidate data across different big data solutions such as Apache Pig, Amazon EMR, Hadoop, MongoDB, and MapReduce.

Data Pre-processing

Data pre-processing tools are commonly used to manipulate data into a consistent format that can be used for analysis. They can also format and clean unstructured data sets, which in turn accelerates data sharing. However, this technology has a limitation: it cannot automate all of its tasks, so it requires human oversight, which makes it strenuous and time-consuming.
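As a rough illustration of what bringing data into a consistent format means, the Python sketch below cleans a few made-up customer records. The field names, the two accepted date formats, and the rule for rejecting incomplete rows are all assumptions chosen for the example.

```python
from datetime import datetime

# Hypothetical raw records with inconsistent spacing, casing, dates, and numbers.
raw_records = [
    {"name": "  Alice ", "signup": "2023-01-15", "spend": "1,200.50"},
    {"name": "BOB", "signup": "15/02/2023", "spend": "300"},
    {"name": "", "signup": "2023-03-01", "spend": "75"},
]

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(text):
    """Return the date as an ISO string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean(record):
    """Normalize one record; return None when it cannot be repaired automatically."""
    name = record["name"].strip().title()
    signup = parse_date(record["signup"])
    try:
        spend = float(record["spend"].replace(",", "").strip())
    except ValueError:
        spend = None
    if not name or signup is None or spend is None:
        return None
    return {"name": name, "signup": signup, "spend": spend}


results = [clean(record) for record in raw_records]
cleaned = [row for row in results if row is not None]
rejected = len(raw_records) - len(cleaned)
print(cleaned)
print("needs manual review:", rejected)
```

The record with the missing name cannot be fixed by a rule, so it is set aside for a person to look at, which is the human oversight the paragraph above refers to.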

Big Data Quality

Data quality is another important parameter of processing big data. Data quality software is widely used to obtain coherent and reliable outputs from the big data that was gathered and processed. It can also cleanse and enrich large data sets by using parallel processing.

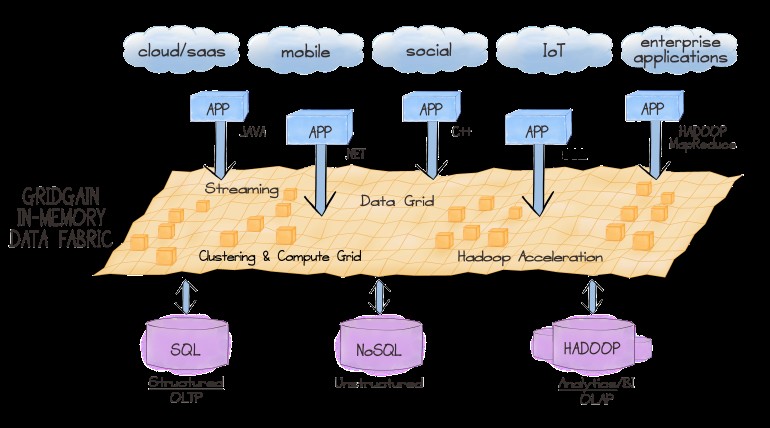

In-memory Data Fabric

In-memory data fabric helps distribute large quantities of data across various system resources such as flash storage, dynamic RAM, or solid-state drives (SSDs). This, in turn, allows low-latency access to big data and its processing on the connected nodes.

NoSQL Database

NoSQL databases are widely used for efficient and reliable data management across a huge network of storage nodes. In these databases, data is stored as key-value pairs, as database tables, or as JavaScript Object Notation (JSON) documents.
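The difference between the key-value and the JSON document models can be shown with plain Python dictionaries. This is only a sketch of the data models, not of any real NoSQL product; `kv_store`, `doc_store`, `insert_document`, and `find` are hypothetical names.

```python
import json

# Key-value model: the store only knows the key; the value is opaque to it.
kv_store = {}
kv_store["session:1001"] = "user=alice;cart=3"

# Document model: each value is a JSON document whose fields can be queried.
doc_store = {}


def insert_document(collection, doc_id, document):
    """Serialize the document to JSON text and store it under its id."""
    collection[doc_id] = json.dumps(document)


def find(collection, field, value):
    """Return every document whose top-level field equals the given value."""
    matches = []
    for raw in collection.values():
        document = json.loads(raw)
        if document.get(field) == value:
            matches.append(document)
    return matches


insert_document(doc_store, "u1", {"name": "Alice", "city": "Toronto"})
insert_document(doc_store, "u2", {"name": "Bob", "city": "Ottawa", "vip": True})
insert_document(doc_store, "u3", {"name": "Carol", "city": "Toronto"})

print(kv_store["session:1001"])
print(find(doc_store, "city", "Toronto"))
```

Note that the second document carries an extra `vip` field the others lack; not requiring every record to share one fixed schema is a large part of the appeal of document stores.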

Using Knowledge Discovery Tools

Knowledge discovery tools allow companies to isolate information and use it to their benefit. They also let companies mine big data, whether structured or unstructured, that is stored in multiple sources such as different file systems, APIs, DBMSs, or other similar platforms.

Stream Analytics

Stream analytics helps companies that need to process big data stored on various platforms and in different formats, because this software is very valuable for filtering, collecting, and analyzing data. Stream analytics also permits connecting to external data sources and incorporating them into the application flow.
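The filtering and analysis steps can be pictured with a small Python sketch that processes events one at a time instead of loading everything first. The event fields, the sample readings, and the two-event window are assumptions made for the example; production stream processors add concerns such as time-based windows and fault tolerance.

```python
from collections import deque


def sensor_events():
    """Stand-in for an external source that yields events as they arrive."""
    readings = [("web", 120), ("db", 900), ("web", 80), ("web", 100), ("db", 700)]
    for source, latency_ms in readings:
        yield {"source": source, "latency_ms": latency_ms}


def rolling_average(events, source, window=2):
    """Filter events by source and yield the average of the most recent readings."""
    recent = deque(maxlen=window)  # older readings fall out automatically
    for event in events:
        if event["source"] != source:
            continue
        recent.append(event["latency_ms"])
        yield sum(recent) / len(recent)


averages = list(rolling_average(sensor_events(), "web"))
print(averages)
```

Because both functions are generators, each event is handled as soon as it appears, which is the property that separates stream analytics from batch processing.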

The Bottom Line

As Forbes has said, “Data Scientists will be the hottest job of the 21st Century”. Data will become a basic necessity for businesses in the years to come. It is predicted that many companies, whether big or small, will employ big data and analytical techniques to gain a deeper understanding and, in turn, provide even better products and services to their customers.

P.S. The post was written by Sarah McGuire. Sarah McGuire is a Digital Strategist for Local SEO Search Inc., a trusted SEO in Toronto. She takes an in-depth, hands-on approach to startup social business strategy. She has experience in digital marketing, social media, content strategy, and marketing communications.